ChatGPT vs Aptitude Tests: Can Generative AI Outperform Humans?

Wednesday 30th August

For months, the recruitment community has been buzzing with rumours: can candidates with little or no specialist training really use generative AI to complete psychometric tests?

With so much speculation, we decided it was time to separate fact from fiction.

Over the past month, one of our Senior Data Scientists has been working tirelessly with a team of postgraduates from UCL to meticulously evaluate ChatGPT and its performance across four key test types.

Our first deep dive? Aptitude Tests.

In this article, we’ll share our research methods and our results.

But in case you just wanted the headlines…

TL;DR –– ChatGPT vs Aptitude Tests

- Candidates with little to no training can use ChatGPT to achieve above-average scores on Verbal Reasoning Tests.

- GPT-4, which sits behind a paywall, scores much higher than the free GPT-3.5, potentially setting social mobility work back by years.

- ChatGPT does not significantly enhance scores on Numerical Reasoning Tests and, in most cases, may even lower them.

- Recruiters may see an increase in the number of candidates passing Verbal Reasoning Tests, but a reduction in the quality of candidates at the interview stage (because their scores don't match their capability).

- As tools like ChatGPT take over basic reasoning, TA teams should consider whether current testing methods evaluate the cognitive skills that modern workplaces really require, or whether we need to start thinking differently about selection.

The context

According to research, one of the best ways to predict candidates’ future job performance is to measure their cognitive ability (Schmidt and Hunter, 1998; Kuncel et al., 2004; Schmidt, 2009; Wai, 2014; Schmidt et al., 2016; Murtza et al., 2020).

And the best way to measure cognitive ability has historically been a traditional Aptitude Test. These tests tend to ask questions or present problems where the answer is either right or wrong; this is what we’d call maximum performance. They paint a picture of what people can do, but not necessarily how they do it. For a long time, these tests have been well regarded as a reliable assessment method with no easy way to game them (beyond using practice tests from the likes of Assessment Day or following the advice shared on sites like YouTube and Reddit).

But in the last year, Generative AI turned the theory that Aptitude Tests were ‘un-gameable’ on its head. Tools like ChatGPT exploded onto the market with their ability to interpret, predict, and generate accurate text responses. Speculation became rife that these tools might now be able to analyse test questions and generate optimal answers, even without being given any detail on how the tests were built or how the right answer is formulated.

Even Microsoft researchers who evaluated an early version of GPT-4, the model behind ChatGPT’s latest version, suggested its reasoning capability is better than that of an above-average human. So it’s no surprise that many TA leaders began to speculate.

This leads us back to the big question –– Can generative AI really be used by candidates to complete psychometric tests, with little or no specialist training?

To find out, our research squad tested ChatGPT against a retired version of text-based Verbal and Numerical Reasoning assessments that we used until a few years ago. They are cornerstones of standard Aptitude Testing and have been validated using standard psychometric design principles and best practices.

They were also used in multiple early career campaigns across many different sectors, giving us solid comparison data from a sample of 36,000 candidates. While these assessments are retired, their question-based format is still identical to that of the majority of Reasoning Tests currently on the market (we checked).

From here on out, we’ll explain both our research process and the results. We’ve also created a downloadable drill-down into the data if you’re interested in diving deeper.

Explaining the Questions to ChatGPT

To see if ChatGPT could complete these tests, we first needed to explain the questions to the Generative AI model in the same way that a candidate would. There are many different ways of framing information for ChatGPT, and this is commonly known as ‘prompting’.

After a lot of research, we settled on five distinct ChatGPT prompting styles to investigate. These are some of the most accessible styles, which any candidate can find online by Googling “best prompting strategies for ChatGPT”. These were:

- Base Prompting: The most basic approach, which involves simply giving ChatGPT the same basic instructions a candidate receives within the assessment

- Chain-of-Thought Prompting: One step up from Base Prompting, this style gives ChatGPT an example of a correctly answered question within the prompt (using a practice question from a testing practice website)

- Generative Knowledge Prompting: As an alternative approach, this prompting style asks ChatGPT to explain the methodology it will use before it answers the question. This gives the candidate a brief chance to prime ChatGPT with a method.

- Persona / Role-based Prompting: ChatGPT can take on a persona, so it can be instructed to assume a role like “You are an experienced corporate analyst at a large financial services organisation”. This prompting style encourages ChatGPT to think about its role as it crafts its answers.

- Vocalise and Reflect Prompting: This style involves asking ChatGPT to create a response, critique that response, and then revise the original draft based on its own critique, a self-critique type of approach.
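To make the five styles concrete, here is a minimal Python sketch of how a first message might be assembled for each one. The function name `build_prompt`, the style keys and the exact wording are illustrative assumptions that paraphrase the descriptions above. They are not the prompts our team used, some of which are reproduced later in this article.

```python
# Illustrative sketch: build_prompt and the style keys are hypothetical names,
# and the wording paraphrases the style descriptions rather than quoting the
# prompts used in the research.
BASE_INSTRUCTIONS = (
    "You will be shown passages of text and statements relating to them. "
    "You must identify whether or not each statement is accurate, according to "
    "the passage of text. If, according to the text, the statement is true, "
    "answer True. If it is false according to the text, answer False. If the "
    "text doesn't provide enough information to determine whether the statement "
    "is true or false, answer Cannot Say. For each statement, there is only one "
    "correct answer."
)

DEFAULT_PERSONA = (
    "You are an experienced corporate analyst at a large financial services "
    "organisation."
)


def build_prompt(style: str, question: str, example: str = "",
                 persona: str = DEFAULT_PERSONA) -> str:
    """Return the first message a candidate might send for one prompting style."""
    if style == "base":
        # Same instructions the candidate sees, nothing more.
        parts = [BASE_INSTRUCTIONS]
    elif style == "chain_of_thought":
        # A worked practice question, then a request to reason step by step.
        parts = [BASE_INSTRUCTIONS, "Here is an example:\n" + example,
                 "Work out the answer step by step, and then write your final "
                 "answer delimited within curly brackets."]
    elif style == "generative_knowledge":
        # Ask for the method before the answer.
        parts = [BASE_INSTRUCTIONS, "Before you answer, please outline in "
                 "detail the methodology for completing such tests accurately."]
    elif style == "persona":
        # Role first, then the standard instructions.
        parts = [persona, BASE_INSTRUCTIONS]
    elif style == "vocalise_reflect":
        # Draft, self-critique and revision requested in one message here;
        # a candidate could equally split these into separate turns.
        parts = [BASE_INSTRUCTIONS, "Write a draft answer, critique your draft, "
                 "and then give an improved final answer."]
    else:
        raise ValueError(f"unknown prompting style: {style!r}")
    return "\n\n".join(parts + [question])


if __name__ == "__main__":
    print(build_prompt("persona", "Statement: All employees are a mentee."))
```

Keeping the shared instructions in one constant means the styles differ only in what is added around them, which mirrors how the styles are described as variations on Base Prompting.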

Comparing the GPT Models

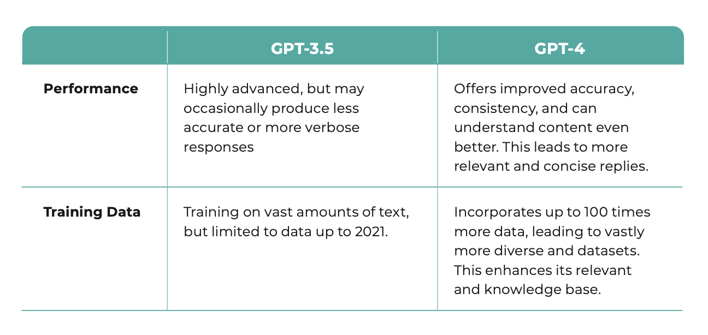

Next up, we needed to decide whether to compare the two different versions of ChatGPT.

GPT-3.5 is currently free. As a result, it’s much more widely used than GPT-4, which sits behind a paywall at $20 / £15 a month. Yet GPT-4 has some enhanced features that we believe could give candidates an additional advantage in completing an Aptitude Test (assuming they have the financial means to pay for it).

Given the potential for different outputs from the two available models, we decided to test both and compare the scores to those achieved by the average human candidate completing the same Aptitude Test.

Tackling timing

Having made these decisions, our research team began testing ChatGPT using a standardised approach through its API. It’s worth noting that some Aptitude Tests operate within time limits. In the early stage of our research, we observed that we could craft a prompt and get a response from ChatGPT almost as fast as a candidate could answer, so timing our tests wasn't necessary.
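For readers who want to repeat the timing check, the sketch below records how long each reply takes and compares it with a per-question limit. `ask` is a hypothetical stand-in for whatever function sends a prompt to the model and returns its reply (for example, a thin wrapper around an API client); it, the helper names and the 60-second limit in the usage example are assumptions, not details of our setup. Note that it only times the model's reply, not the time a candidate spends typing or pasting the prompt.

```python
import time
from typing import Callable, List, NamedTuple


class TimedReply(NamedTuple):
    reply: str
    seconds: float
    within_limit: bool


def time_replies(ask: Callable[[str], str], prompts: List[str],
                 limit_seconds: float) -> List[TimedReply]:
    """Send each prompt via `ask`, timing the round trip against a limit."""
    results = []
    for prompt in prompts:
        start = time.perf_counter()  # monotonic, high-resolution clock
        reply = ask(prompt)
        elapsed = time.perf_counter() - start
        results.append(TimedReply(reply, elapsed, elapsed <= limit_seconds))
    return results


if __name__ == "__main__":
    def fake_ask(prompt: str) -> str:
        # Hypothetical stand-in for a real model call.
        return "{True}"

    for r in time_replies(fake_ask, ["Q1", "Q2"], limit_seconds=60.0):
        print(r.reply, f"{r.seconds:.4f}s", r.within_limit)
```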

Can ChatGPT beat humans at Verbal Reasoning?

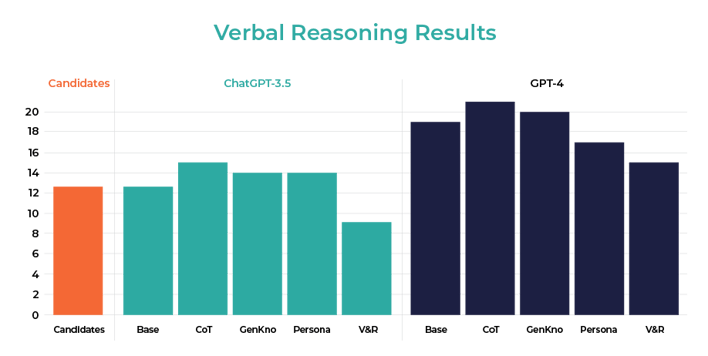

The results for Verbal Reasoning were nothing short of amazing: almost all of the prompting styles beat the average for human candidates. Of course, the margin varied considerably depending on the prompting style and on whether we were using the free or paid version of ChatGPT.

GPT-4 with Chain-of-Thought prompting achieved an astounding score of 21/24, better than 98.8% of human candidates in a sample of 36,000 people.

As you can see, GPT-4 performed much more strongly than its predecessor, GPT-3.5, although GPT-3.5 was still able to score higher than the average candidate using 3 out of 5 prompting styles –– suggesting that candidates using ChatGPT to complete Aptitude Tests will have an advantage over their peers and potentially be able to mask their true verbal reasoning ability.
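A figure like "better than 98.8% of human candidates" is a percentile-style comparison against the norm group. A minimal sketch of that calculation follows. It assumes candidates who tie with the model are not counted as beaten, which is one convention among several, and the six scores in the example are made up for illustration, not drawn from our 36,000-candidate sample.

```python
from typing import Sequence


def percent_outscored(score: float, human_scores: Sequence[float]) -> float:
    """Percentage of human candidates scoring strictly below `score`.

    Ties are not counted as beaten (an assumed convention).
    """
    if not human_scores:
        raise ValueError("need at least one human score")
    below = sum(1 for s in human_scores if s < score)
    return 100.0 * below / len(human_scores)


if __name__ == "__main__":
    toy_scores = [10, 12, 15, 18, 21, 22]  # hypothetical scores out of 24
    print(f"{percent_outscored(21, toy_scores):.1f}%")  # 66.7%
```

Counting ties as half a win instead would raise the toy result slightly, so it is worth checking which convention a test provider uses before comparing percentiles across reports.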

Our analysis also revealed that the Chain-of-Thought and Generative Knowledge prompting strategies were most effective. For Chain-of-Thought, this is likely because giving ChatGPT an example of a correct answer helps it structure its responses better. Meanwhile, Generative Knowledge prompts ChatGPT to break the problem down into simple steps (just as a human might), which makes it easier to solve. In this case, ChatGPT is likely also drawing on the large amount of information available online about Aptitude Tests and how they work.
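The Generative Knowledge style can also be run as a short conversation: the model first sets out its method, and that reply is kept in the conversation history so the method is in view when it answers. The sketch assumes a hypothetical `chat` function that takes a list of role/content messages (the shape used by chat-style model APIs) and returns the assistant's reply. It is an illustration, not the exact sequence we ran.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def generative_knowledge(chat: Callable[[List[Message]], str],
                         instructions: str, question: str) -> str:
    """Two-turn Generative Knowledge prompting: method first, answer second."""
    messages: List[Message] = [{
        "role": "user",
        "content": instructions + "\n\nBefore you answer, please outline in "
                   "detail the methodology for completing such tests accurately.",
    }]
    methodology = chat(messages)
    # Keep the model's own method in the history so the answer can build on it.
    messages.append({"role": "assistant", "content": methodology})
    messages.append({
        "role": "user",
        "content": question + "\n\nApply your methodology, then write your "
                   "final answer delimited within curly brackets.",
    })
    return chat(messages)


if __name__ == "__main__":
    def fake_chat(messages: List[Message]) -> str:
        # Hypothetical stand-in for a real model: a method first, then an answer.
        if len(messages) == 1:
            return "1. Read the passage. 2. Check each claim against it."
        return "{Cannot Say}"

    print(generative_knowledge(fake_chat, "Verbal reasoning instructions...",
                               "Statement: All employees are a mentee."))
```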

Examples of the prompting styles used to complete the Verbal Reasoning Test are detailed below.

- Example Verbal Reasoning Question:

A mentoring programme has recently been established at a creative marketing agency. All current employees are either team members, team leaders, or department managers. The motivation behind establishing the programme is primarily to increase the frequency and clarity of communication between employees across various roles in order to enhance innovative output through collaboration while providing the additional benefit of deepening employees' understanding of career progression in the context of individual and collective competencies and skill gaps. All team members have a designated mentor, but they are not permitted to act as a mentor for another employee, and all department managers have one designated mentee.

Based on the above passage, which of the following statements is true:

- All employees are a mentor

- All employees are a mentee

- All employees are a mentor and a mentee

- Cannot Say

Chain-of-Thought Example Prompt:

- You will be shown passages of text and statements relating to them.

- You must identify whether or not each statement is accurate, according to the passage of text.

- If, according to the text, the statement is true, answer True. If it is false according to the text, answer False.

- If the text doesn’t provide enough information to determine whether the statement is true or false, answer Cannot Say.

- For each statement, there is only one correct answer. Here is an example:

- [Add in Example]

- Work out the answer step by step, and then write your final answer delimited within curly brackets.

Generative Knowledge Example Prompt:

- You will be doing a verbal reasoning test, in which you will be shown passages of text and statements relating to them.

- You must identify whether or not each statement is accurate according to the passage of text.

- If, according to the text, the statement is true, answer True. If, according to the text, it is false, answer False.

- If the text doesn't provide enough information to determine the statement to be true or false, answer Cannot Say.

- For each statement, there is only one correct answer.

- Before you answer, please outline in detail the methodology for completing such tests accurately.

Persona Example Prompt:

- You are an experienced corporate analyst at a large organisation, known for your meticulous attention to detail and your ability to make accurate judgments based on documents and reports.

- Today, you've been given a new report to review: You will be shown passages of text, and statements relating to them.

- You must identify whether or not each statement is accurate, according to the passage of the text.

- If, according to the text, the statement is true, answer True. If, according to the text, it is false, answer False.

- If the text doesn't provide enough information to determine the statement to be true or false, answer Cannot Say.

- For each statement, there is only one correct answer.

- Here is an example: [Enter example passage]

- Work out the answer step by step, and then write your final answer delimited within curly brackets

As you can see, it’s not that hard to set up these prompting styles, and they don't differ hugely from the types of 'how to complete the assessment' videos we already see candidates create on sites like TikTok and Practice Aptitude Tests.
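Because the prompts above ask ChatGPT to work step by step and put its final answer in curly brackets, each reply has to be parsed before it can be scored. A minimal sketch under that assumption takes the last bracketed value that is a valid option and compares the results with an answer key. The names `extract_final_answer` and `score` are hypothetical.

```python
import re
from typing import List, Optional

# Accepted options, keyed by lower-case text for case-insensitive matching.
VALID_ANSWERS = {"true": "True", "false": "False", "cannot say": "Cannot Say"}
BRACKETED = re.compile(r"\{([^{}]*)\}")


def extract_final_answer(reply: str) -> Optional[str]:
    """Return the last {...}-delimited valid answer in a reply, or None."""
    for raw in reversed(BRACKETED.findall(reply)):
        key = " ".join(raw.split()).lower()  # trim and collapse whitespace
        if key in VALID_ANSWERS:
            return VALID_ANSWERS[key]
    return None


def score(replies: List[str], answer_key: List[str]) -> int:
    """Count replies whose extracted answer matches the key.

    Unparseable replies score zero.
    """
    if len(replies) != len(answer_key):
        raise ValueError("replies and answer key must be the same length")
    return sum(extract_final_answer(r) == a for r, a in zip(replies, answer_key))


if __name__ == "__main__":
    replies = ["Step 1 ... so {Cannot Say}", "Therefore { true }", "No brackets here"]
    print(score(replies, ["Cannot Say", "True", "False"]))  # 2
```

Taking the last valid bracketed value, rather than the first, is a design choice: step-by-step reasoning may mention options in brackets before settling on a final one.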

What about Numerical Reasoning?

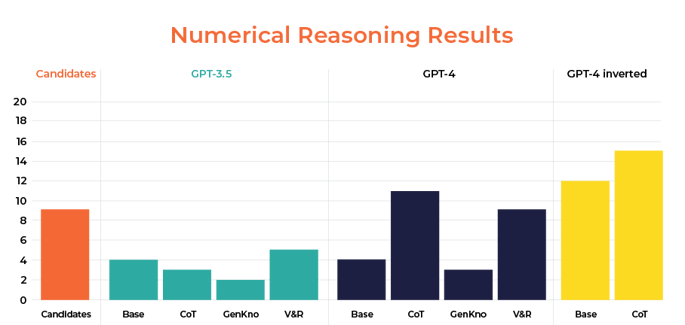

On the flip side, Numerical Reasoning posed much more of a challenge for both GPT models, which makes sense given that they are designed to work with language rather than complex numerical reasoning. All five prompting styles scored well below the human average on GPT-3.5, while two of the prompting styles scored slightly above average on GPT-4.

Despite the improvement, even the best-performing combination, Chain-of-Thought Prompting with GPT-4, struggled with more complex number sequences.

While ChatGPT didn’t perform that well here, new plug-ins (apps you can install, e.g. in Google Chrome, to appear as an ‘overlay’ on your browser) are emerging all the time, and we can expect them to eventually get better at solving these types of tests. You can read a bit more about a few of them here. We tested one plug-in, Wolfram Alpha, but got the following response:

“I'm sorry, but it seems that Wolfram Alpha could not find a rule governing the sequence [9, 15, 75, 79, 237]”

This suggests that right now, these types of plug-ins aren’t where they’d need to be yet to complete a numerical reasoning test (although that’s not to say they won’t be soon based on how quickly Generative AI technology is advancing).
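To illustrate why a general-purpose tool can come up empty here, the sketch below runs two standard checks on the sequence from the Wolfram Alpha response, constant difference and constant ratio, and both fail. It then tests one candidate rule, alternately adding and multiplying (+6, ×5, +4, ×3), which does reproduce all five numbers. That rule is an illustrative assumption, not necessarily the answer the test intended, and the function names are hypothetical.

```python
from fractions import Fraction
from typing import List, Sequence, Tuple


def is_arithmetic(seq: Sequence[int]) -> bool:
    """True if consecutive terms differ by a constant amount."""
    diffs = {b - a for a, b in zip(seq, seq[1:])}
    return len(diffs) <= 1


def is_geometric(seq: Sequence[int]) -> bool:
    """True if consecutive terms share a constant ratio (assumes no zero terms)."""
    ratios = {Fraction(b, a) for a, b in zip(seq, seq[1:])}
    return len(ratios) <= 1


def matches_steps(seq: Sequence[int], steps: List[Tuple[str, int]]) -> bool:
    """True if applying the (+n / *n) steps to the first term reproduces seq."""
    if len(steps) != len(seq) - 1:
        return False
    value = seq[0]
    for (op, n), expected in zip(steps, seq[1:]):
        value = value + n if op == "+" else value * n
        if value != expected:
            return False
    return True


if __name__ == "__main__":
    sequence = [9, 15, 75, 79, 237]
    print(is_arithmetic(sequence), is_geometric(sequence))  # False False
    # One candidate rule (an assumption, not the test's published answer):
    print(matches_steps(sequence, [("+", 6), ("*", 5), ("+", 4), ("*", 3)]))  # True
```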

3 key takeaways on how ChatGPT performs in Aptitude Testing

- It’s clear that candidates with minimal training can now use ChatGPT to achieve scores much higher than the human average on Verbal Reasoning Tests. What’s more, if they have the financial means to pay for GPT-4, their scores will be significantly higher than the average candidate, regardless of prompting style or effort. This has the potential to make the gap between candidates of different socioeconomic means even greater.

- Conversely, using ChatGPT to improve scores on Numerical tests is unlikely to be that useful at this stage. We could expect a select few candidates using GPT-4 to exceed average scores, but in most cases, the use of ChatGPT will hamper rather than enhance their overall results.

- This leads us to conclude that the tests most likely to be negatively impacted by ChatGPT are language-based ones, like Verbal Reasoning. It’s worth noting that beyond Verbal Reasoning, the most commonly used language-based tests are Situational Judgement Tests. These are the subject of our next research piece which we’ll be publishing in the coming weeks.

3 repercussions for recruitment

So how do these findings affect the hiring process?

We believe this research presents three primary considerations for TA teams using traditional, text-based Aptitude Tests:

- An increase in pass rates, but a drop in candidate quality: Aptitude Tests are designed to sift and select candidates based on the required level of cognitive ability for a role. However, given how easy it would now be for a candidate to use ChatGPT to complete a text-based Verbal Reasoning Test and achieve an above-average score, TA teams could have a big challenge on their hands in a very short space of time.

Very quickly, these tests could cease to be a useful tool for saving recruiter time and could in fact increase time to hire, as recruiters waste time interviewing candidates who aren’t actually the right fit for the role. There is also a risk that TA teams won’t sift in the candidates who do have the right capability, because their scoring system is no longer effective. The consequences could range from damaging the reliability of your recruitment process to losing the hiring manager’s faith in the efficacy of the first sift.

- Missed diversity goals: The difference in Verbal Reasoning results between GPT-3.5 and GPT-4 was pretty significant. But with GPT-4 sitting behind a paywall, candidates with the financial means to pay for it will suddenly have an advantage over those who don’t, potentially setting back all of the hard work TA teams have done over the past few years to increase diversity and social mobility.

- Reassessing intelligence in the workplace: If a tool like ChatGPT can reason as well as or better than the average candidate, is this form of reasoning really the best thing for us to assess? In a world where Generative AI tools like ChatGPT can take care of basic reasoning, help employees go faster, and automate manual processes, the real value of having humans in a role is to make sure they can do more complex problem solving, and use high levels of learning agility to help them work out how to embrace new technology like Generative AI.

This poses a big question: are Verbal and Numerical Reasoning Tests really the best way to assess the type of cognitive ability we need today? Or should we instead be thinking harder about what we need to assess in every candidate, at every level?

In light of these findings, and given the knowledge that candidates are already using ChatGPT to game traditional assessments, talent acquisition leaders must decide how they’ll tackle this game-changing development and do it quickly.

The best ways to tackle this problem

TA leaders have three options available to them to tackle this challenge.

- Detect - Continue using traditional, text-based Aptitude Tests, but try to use additional tools to detect whether candidates are using Generative AI to complete them. The challenge with this approach is that, as of today, no ChatGPT detection model has been shown to work effectively, and some reports suggest these detection methods produce a false positive 2 times in 10, meaning you risk falsely accusing candidates of cheating and potentially harming your employer brand. It’s also worth noting that, given how quickly the underlying language models change and improve, these detection methods could quickly become out of date.

- Deter - Attempt to stop candidates using Generative AI through (already unpopular) methods like video proctoring, which have been around for several years but have received strong pushback from candidates. These methods are also likely to increase candidate anxiety, shrinking your talent pool further (Hausdorf, LeBlanc and Chawla, 2003). Simpler methods, like preventing multiple tabs from being open at once, are an option, but even these can be easily bypassed with a phone. For example, candidates can use an iPhone to scan text as an image, convert it to text, and then upload it to ChatGPT, all in just a few seconds.

- Design - Look for a different way to test cognitive ability designed to help us assess how a candidate really thinks and learns at work and in a way that cannot be completed by ChatGPT. The starting point is to choose an assessment type that isn’t based on language, and so can’t easily be completed by large language AI models like ChatGPT. Of course, we’re biased on this one, but based on our research, interactive, task-based assessments currently prove much more robust and less susceptible to completion by ChatGPT because they create scores based on actions, not language.
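To see why a 2-in-10 false positive rate matters for the Detect option, consider a worked example. Every input below is a hypothetical assumption chosen for round numbers, not a finding from our research: 1,000 candidates, 20% of whom use Generative AI, a detector that catches 80% of them, and "2 in 10" read as a 20% false positive rate on honest candidates. The helper name `flag_breakdown` is also hypothetical.

```python
from typing import NamedTuple


class FlagBreakdown(NamedTuple):
    true_flags: float   # candidates using AI who are flagged
    false_flags: float  # honest candidates wrongly flagged
    share_false: float  # fraction of all flags that are false accusations


def flag_breakdown(candidates: int, ai_share: float,
                   detection_rate: float, false_positive_rate: float) -> FlagBreakdown:
    """Expected flags from an AI detector under the stated (hypothetical) rates."""
    ai_users = candidates * ai_share
    honest = candidates - ai_users
    true_flags = ai_users * detection_rate
    false_flags = honest * false_positive_rate
    total = true_flags + false_flags
    share_false = false_flags / total if total else 0.0
    return FlagBreakdown(true_flags, false_flags, share_false)


if __name__ == "__main__":
    result = flag_breakdown(1000, ai_share=0.2, detection_rate=0.8,
                            false_positive_rate=0.2)
    print(f"{result.false_flags:.0f} honest candidates flagged; "
          f"{result.share_false:.0%} of all flags are false")
```

Under these made-up numbers, 160 of the 800 honest candidates would be flagged, and half of all flags would be false accusations.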

Where to go from here

An ISE study showed that almost half of students would use ChatGPT in an assessment, which makes reviewing your approach to aptitude testing an urgent priority.

We’re continuing to explore the impact of ChatGPT across the wider assessment industry, and over the next three weeks, we’ll be sharing our research on ChatGPT vs. Situational Judgement Tests, Question-Based Personality Tests, and Task-Based Assessments.

We recently created a new report with insights generated from a survey of 2,000 students and recent graduates, as well as data science-led research with UCL postgraduate researchers.

This comprehensive report on the impact of ChatGPT and Generative AI reveals fresh data and insights about how...

👉 72% of students and recent graduates are already using some form of Generative AI each week

👉 Almost a fifth of candidates are already using Generative AI to help them fill in job applications or assessments, with increased adoption among under-represented groups

👉 Candidates believe it is their right to use Generative AI in the selection process and a third would not work for an employer who told them they couldn't do so.

Sign up for our newsletter

Join over 2,000 disruptive TA leaders and get insights into the latest trends turning TA on its head in your inbox, every week

Sign up for our newsletter to be notified as soon as our next research piece drops.
